Abstract

Method

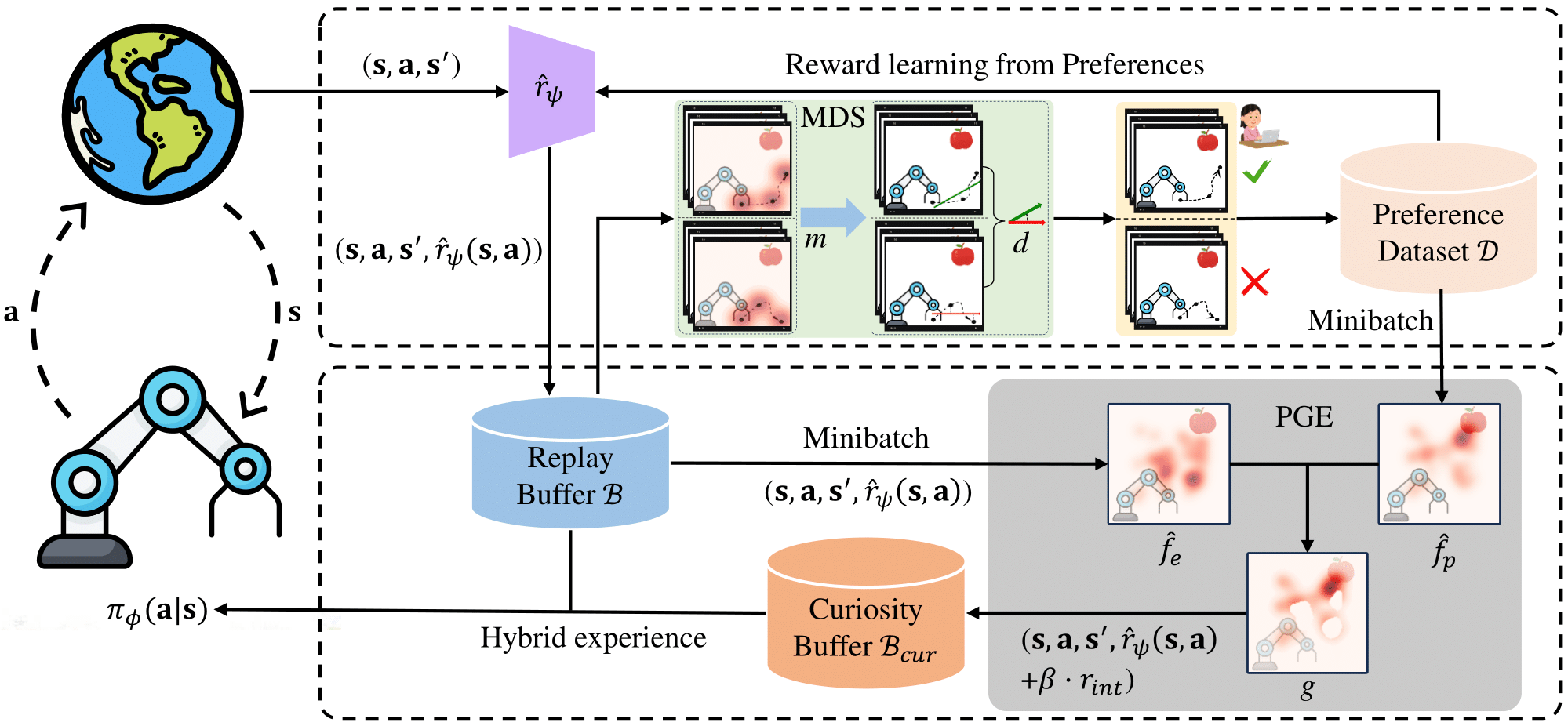

Figure 1: Illustration of SENIOR. PGE assigns high task rewards to less-visited and human-preferred states, encouraging efficient exploration by updating the policy with hybrid experience; this in turn supplies query selection with more valuable, task-relevant segments. MDS selects easily comparable and meaningful segment pairs with apparent motion distinction, yielding high-quality labels that facilitate reward learning and give the agent accurate reward guidance for PGE exploration. During training, MDS and PGE interact and complement each other, improving both the feedback efficiency and the exploration efficiency of PbRL.
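
The two ideas in the caption can be illustrated with a minimal sketch. It is not the implementation used in SENIOR, and every name and formula in it is an assumption made for illustration: `hybrid_reward` adds a count-based bonus (with a hypothetical weight `beta`) to a learned preference reward, so less-visited states score higher, and `select_distinct_pairs` ranks segment pairs by the cosine similarity of their net 2D displacement, treating low similarity as "apparent motion distinction".

```python
import math
from itertools import combinations


def hybrid_reward(learned_reward, visit_count, beta=0.1):
    """Learned preference reward plus a bonus that shrinks as visits grow.

    Assumption: a 1/sqrt(count + 1) bonus stands in for the visit-based term.
    """
    return learned_reward + beta / math.sqrt(visit_count + 1)


def motion_direction(segment):
    """Net displacement of a segment given as a list of (x, y) positions."""
    (x0, y0), (x1, y1) = segment[0], segment[-1]
    return (x1 - x0, y1 - y0)


def cosine_similarity(u, v):
    """Cosine similarity of two 2D vectors; motionless segments count as similar."""
    norm_u, norm_v = math.hypot(*u), math.hypot(*v)
    if norm_u == 0 or norm_v == 0:
        return 1.0
    return (u[0] * v[0] + u[1] * v[1]) / (norm_u * norm_v)


def select_distinct_pairs(segments, num_pairs):
    """Return index pairs whose motion directions are least similar."""
    scored = []
    for i, j in combinations(range(len(segments)), 2):
        sim = cosine_similarity(motion_direction(segments[i]),
                                motion_direction(segments[j]))
        scored.append((sim, i, j))
    scored.sort()  # lowest similarity (most distinct motion) first
    return [(i, j) for _, i, j in scored[:num_pairs]]


if __name__ == "__main__":
    segments = [
        [(0, 0), (1, 0)],   # moves right
        [(0, 0), (2, 0)],   # moves right, farther
        [(0, 0), (-1, 0)],  # moves left
    ]
    print(select_distinct_pairs(segments, 1))
    print(hybrid_reward(1.0, 0), hybrid_reward(1.0, 3))
```

In this toy setting the pair of opposite-moving segments is ranked first, which a human labeler would presumably find easy to compare. The choice of displacement-based similarity is only one plausible proxy for motion distinction.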